Timeline of an ASWB Exam Guidebook cover-up.

Summary

Test-takers preparing for Association of Social Work Boards (ASWB) examinations in 2025 have been presented with conflicting information about how much time they are allotted for each section of the examination, how many breaks are provided, and whether they can review all or only half of their answers at the end of the exam, among other factors.

Contrary to best practices and testing standards, ASWB modified their Exam Guidebook without adequately notifying stakeholders: test-takers, boards, and the public. By using the Internet Archive’s Wayback Machine to view earlier versions of ASWB’s Exam Guidebook and website, one can uncover a clear timeline of exam policy changes. That timeline demonstrates that no examinee in April or May 2025 was adequately notified or provided appropriate documentation to prepare for the exam.

On or about April 14, 2025, ASWB updated the language in the Exam Guidebook to match the policy they announced in an April 10, 2025 blog post and, according to social media reports from test-takers, implemented on or around March 30, 2025.

No test-taker has been given enough time to prepare for the new exam format. Furthermore, ASWB has not provided social work boards with any psychometric evidence that the new two-section format of the examination is psychometrically equivalent to one in which you could return to any item at any time. Many boards appeared as blindsided by the policy changes as test-takers were.

Fundamentally, this is not how examination programs are supposed to operate. It is obviously unethical (and psychometrically invalid, unfair, and unreliable) to change the rules of an examination without adequate notification. Social workers spend thousands of dollars and months of their time to prepare for the examination.

At minimum, the ASWB examination program requires a two-month moratorium period during which psychometricians can ensure the exam formats are equivalent and test-takers can have adequate time to study using the new ruleset. Anything less continues with the cowboy calculus and regulatory recklessness that pervades social work licensure examinations.

ASWB produced two Exam Guidebooks in 2025

The PSI guidebook was valid until March 16, 2025. ASWB exams then took a two-week break. The Pearson VUE guidebook is valid after March 30, 2025.

Both guidebooks are visible in this March 11, 2025 screenshot from the Internet Archive:

In this February 12, 2025 screenshot from the Internet Archive, there is one set of policies:

Exam Policy Rule Set # 1

(aswb.org/…/2023/10/ASWB-Exam-Guidebook.pdf)

- Valid October 2024 through March 16, 2025

- “You may skip questions and go back to them later, flag questions for review, highlight text, and go back and change answers”

- “You may take breaks of up to 10 minutes during the four-hour exam at your discretion. Testing time does not stop for breaks.”

Archived January 21, 2025 by web.archive.org.

This version of the handbook accurately described the exam as occurring in a single, uninterrupted period. Examinees could take informal breaks. However, the clock would continue to run, and the full length of the exam would remain accessible for review.

This file was created on November 21, 2024. The same file, accessed from the ASWB website in 2025, looks very different!

Sneak-Revision: ASWB revised & reuploaded (2023/10/ASWB-Exam-Guidebook.pdf) in April 2025

This is how the Exam Guidebook for this period currently appears on ASWB’s website.

Source: https://www.aswb.org/wp-content/uploads/2023/10/ASWB-Exam-Guidebook.pdf

Using the PDF metadata, it is clear that ASWB included language about exam halves and breaks after the policies went into effect.
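For readers who want to repeat this check on a downloaded copy, here is a minimal sketch of reading the creation and modification dates. It assumes the PDF stores its `/CreationDate` and `/ModDate` entries as plain, unencrypted literal strings in the standard `D:YYYYMMDDHHmmSS` date format; files that compress or encrypt that dictionary need a full PDF library instead. The function names are hypothetical, and the sample bytes in the usage note carry only the dates reported later in this post, not real file contents.

```python
import re
from datetime import datetime, timedelta, timezone

# PDF date strings look like D:YYYYMMDDHHmmSS+HH'mm'; everything after the year is optional.
_PDF_DATE = re.compile(
    r"D:(\d{4})(\d{2})?(\d{2})?(\d{2})?(\d{2})?(\d{2})?"
    r"(?:([Zz+\-])(?:(\d{2})'?(?:(\d{2})'?)?)?)?"
)


def parse_pdf_date(raw):
    """Convert a PDF date string into a datetime (naive if no offset is recorded)."""
    m = _PDF_DATE.match(raw.strip())
    if m is None:
        raise ValueError("not a PDF date string: %r" % raw)
    year = int(m.group(1))
    month, day = int(m.group(2) or 1), int(m.group(3) or 1)
    hour, minute, second = (int(m.group(i) or 0) for i in (4, 5, 6))
    sign, off_h, off_m = m.group(7), m.group(8), m.group(9)
    if sign is None:
        tz = None
    elif sign in "Zz":
        tz = timezone.utc
    else:
        delta = timedelta(hours=int(off_h or 0), minutes=int(off_m or 0))
        tz = timezone(delta if sign == "+" else -delta)
    return datetime(year, month, day, hour, minute, second, tzinfo=tz)


def find_info_dates(pdf_bytes):
    """Scan raw PDF bytes for literal /CreationDate and /ModDate entries (first match each)."""
    found = {}
    for key in ("CreationDate", "ModDate"):
        m = re.search(rb"/" + key.encode() + rb"\s*\((D:[^)]*)\)", pdf_bytes)
        if m:
            found[key] = parse_pdf_date(m.group(1).decode("ascii"))
    return found
```

Usage: `find_info_dates(open("guidebook.pdf", "rb").read())`, where `guidebook.pdf` is a placeholder for a local copy. A creation date later than the date a policy took effect is the signal described above.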

I believe ASWB used the same file names when retroactively documenting these changes to obscure their potential impact on test-takers, boards, and other stakeholders.

The Internet Archive did not crawl the ASWB Exam Guidebook between January 21, 2025 and May 16, 2025. As a result, I am unable to verify when the version of the Exam Guidebook created on April 14, 2025 was first shared with the public; I downloaded it on May 16, 2025.
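The crawl gap can be checked independently. The sketch below builds a query against the Wayback Machine's CDX server for the guidebook URL cited above and turns the JSON response into a list of captures. The helper names are my own, and the field list is just one reasonable choice; the actual network call is kept in a separate function so the rest can be tried offline.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"
GUIDEBOOK_URL = "https://www.aswb.org/wp-content/uploads/2023/10/ASWB-Exam-Guidebook.pdf"


def cdx_query_url(target, start, end):
    """Build a CDX query for captures of `target` between two YYYYMMDD dates."""
    params = {
        "url": target,
        "from": start,
        "to": end,
        "output": "json",
        "fl": "timestamp,original,statuscode,digest",
    }
    return CDX_ENDPOINT + "?" + urlencode(params)


def parse_cdx_rows(payload):
    """Turn the CDX JSON payload (header row, then one row per capture) into dicts."""
    rows = json.loads(payload) if payload.strip() else []
    if not rows:
        return []
    header, *captures = rows
    return [dict(zip(header, row)) for row in captures]


def list_captures(target, start, end):
    """Fetch and parse captures; requires network access."""
    with urlopen(cdx_query_url(target, start, end), timeout=30) as resp:
        return parse_cdx_rows(resp.read().decode("utf-8"))
```

Calling `list_captures(GUIDEBOOK_URL, "20250121", "20250516")` lists the captures recorded for that window. Two captures with different `digest` values indicate that the file served at the same URL changed between crawls.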

“I could have sworn the ASWB Exam Guidebook used to say something else!”

– April & May test-takers

Correct.

On April 14, 2025, ASWB retroactively changed their deprecated test documentation (valid October 2024 through March 16, 2025) to align with the policy changes announced on April 10, 2025.

Watch Dana Krobin, LMSW’s testimony at the April 30th Meeting of the Social Work Licensure Workgroup in Maryland.

Exam Policy Rule Set # 2

(aswb.org/…/2025/02/2025-ASWB-Examination-Guidebook-Pearson-VUE.pdf)

- Presented to test-takers for Pearson VUE between February 28, 2025 and April 14, 2025.

- “You may skip questions and go back to them later, flag questions for review, highlight text, and go back and change answers”

- “There are two types of breaks that you may take during your exam, a scheduled 10-minute break and unscheduled breaks.”

- “You will be given the entire exam time at the beginning of the test.”

- “There are no individually timed sections, so manage your time accordingly.”

Here is how ASWB’s Exam Guidebook appears in the April 6, 2025 snapshot in the Internet Archive:

Sneak-Revision: ASWB revised & reuploaded (2025/02/2025-ASWB-Examination-Guidebook-Pearson-VUE.pdf) in April 2025

The Exam Guidebook PDF crawled by the Internet Archive on April 6, 2025 was created on February 26, 2025 and last edited on April 2, 2025.

The same file, downloaded from ASWB’s website on May 15, 2025, was created on April 14, 2025.

This is the same day that ASWB’s deprecated documentation was changed to align with the April 10, 2025 announcement of exam administration policy changes.

April 14, 2025 was a busy day for ASWB. They created and modified two new exam guidebooks, which are now available on their website. No revision date is visible on either document.

Exam Policy Rule Set # 3

(aswb.org/…/2025/02/2025-ASWB-Examination-Guidebook-Pearson-VUE.pdf)

- Presented to test-takers for Pearson VUE between April 14, 2025 and today

- “The exam is divided into two 85-question sections, each of which has a two-hour time limit.”

- “After you have completed the first section, you will not be able to return to it.”

Why it matters

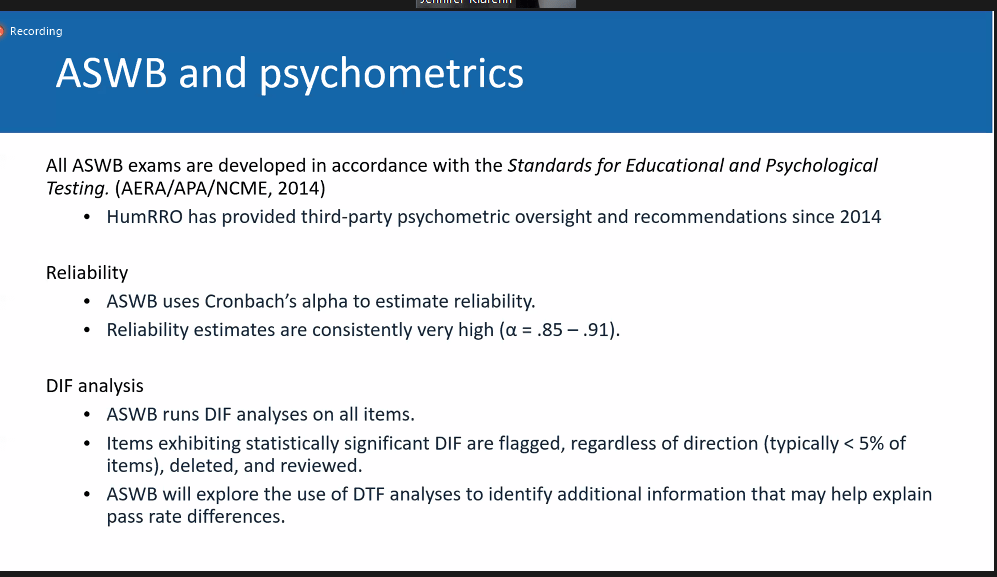

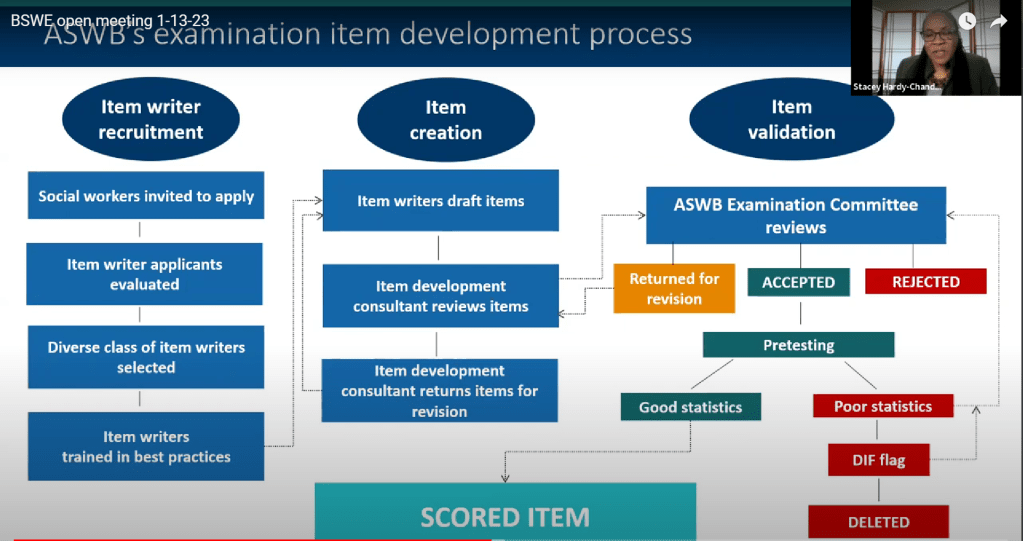

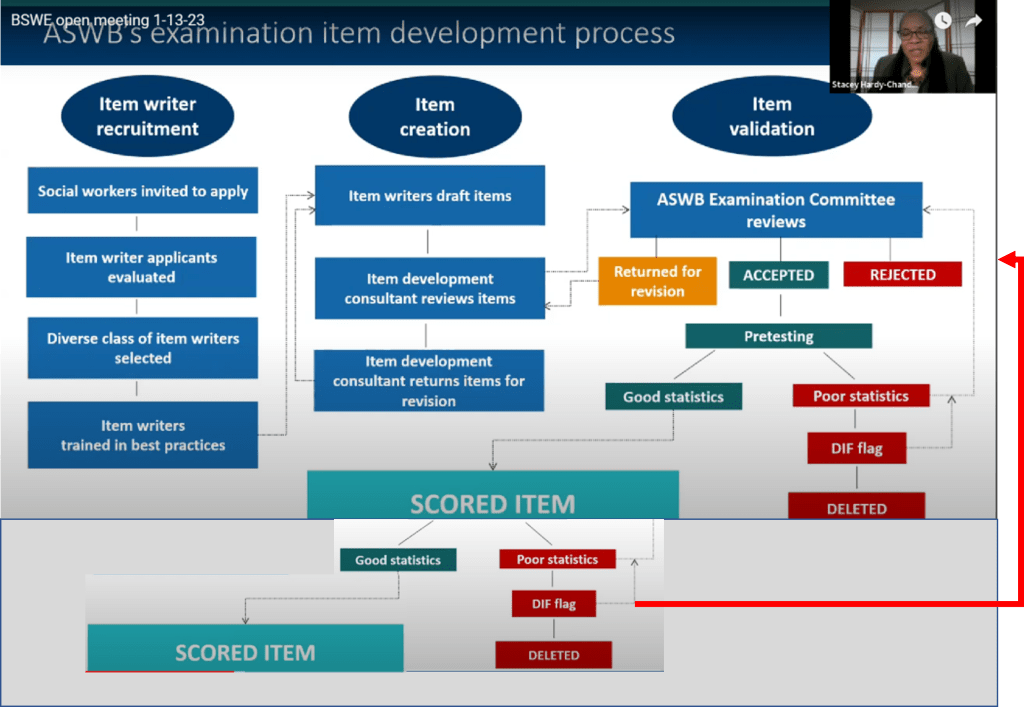

Examinees in any high-stakes exam are entitled to current and accurate information about the examination process. Furthermore, when substantive changes are made to that process, the exam developer is required to first establish measurement equivalency between the old and new processes. For April & May 2025 examinees, ASWB met neither of those obligations. These obligations are set by the AERA Standards for Educational and Psychological Testing. Test developers must abide by those standards as a foundational element of the legal defensibility of a high-stakes exam.

While the addition of a scheduled break is likely a welcomed change for many examinees, it is unclear how it may impact exam results. To the degree examinees are refreshed and able to refocus after a break, performance may improve. At the same time, for those examinees who struggle with time management or test anxiety, having a clock running down toward zero twice in their exam process instead of once may negatively impact performance.

In addition, the forced-break process is actually likely to shorten the total exam time taken by examinees. Any remaining time at the completion of the first section is not carried over to the second half of the test. Remaining time from either half that the examinee might otherwise have used to review (and, if necessary, change) responses to items in the other half of the exam cannot be used for that purpose.
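A small worked example makes the arithmetic concrete. The limits come from the guidebook language quoted above (a four-hour exam versus two two-hour sections); the 100-minute pace is a hypothetical examinee, not data from any real administration.

```python
TOTAL_LIMIT_MIN = 240    # single four-hour block (Rule Sets 1 and 2)
SECTION_LIMIT_MIN = 120  # two-hour limit per 85-question section (Rule Set 3)


def second_half_minutes_single_block(first_half_min):
    """Minutes left for the second half when time is one shared pool."""
    return max(0, TOTAL_LIMIT_MIN - first_half_min)


def second_half_minutes_two_sections(first_half_min):
    """Minutes for the second half when unused first-section time is not carried over."""
    return SECTION_LIMIT_MIN


def minutes_lost(first_half_min):
    """Time the two-section format removes for an examinee who finishes section one early."""
    return max(
        0,
        second_half_minutes_single_block(first_half_min)
        - second_half_minutes_two_sections(first_half_min),
    )


# Hypothetical examinee who finishes the first 85 questions in 100 minutes.
print(second_half_minutes_single_block(100))  # 140
print(second_half_minutes_two_sections(100))  # 120
print(minutes_lost(100))                      # 20
```

Under the single-block rules this examinee would have 140 minutes for the remaining questions and for reviewing any item; under the two-section rules the 20 unused minutes are simply gone, and the first 85 items can no longer be revisited.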

Because ASWB apparently failed to meaningfully investigate the impacts of this structural change prior to implementing it, the effects on performance remain unknown. If boards had modified exam timing without ASWB’s approval, they would need to demonstrate their policies did not substantively impact test scores; yet, boards allow ASWB to make these changes without demonstrating measurement equivalence.

Ultimately, examinees cannot appropriately prepare themselves for an exam whose structure is unclear or meaningfully different from what they were told it would be. Examinees who believed that they could return to all questions at the end of the exam, and followed ASWB’s explicit guidance to prepare themselves accordingly, may have had their results negatively impacted by the novel time and break policies.

ASWB and its member boards have a duty to examinees to address the harms done by these shifting descriptions of the exam process, and to ensure a more professional process moving forward, in accordance with the AERA Standards.

Standard 4.4

If test developers prepare different versions of a test with some change to the test specifications, they should document the content and psychometric specifications of each version. The documentation should describe the impact of differences among versions on the validity of score interpretations for intended uses and on the precision and comparability of scores.

Comment: Test developers may have a number of reasons for creating different versions of a test, such as allowing different amounts of time for test administration by reducing or increasing the number of items on the original test, or allowing administration to different populations by translating test questions into different languages.

Test developers should document the extent to which the specifications differ from those of the original test, provide a rationale for the different versions, and describe the implications of such differences for interpreting the scores derived from the different versions.

Test developers and users should monitor and document any psychometric differences among versions of the test based on evidence collected during development and implementation. Evidence of differences may involve judgments when the number of examinees receiving a particular version is small (e.g., a braille version). Note that these requirements are in addition to the normal requirements for demonstrating the equivalency of scores from different forms of the same test.

Standard 6.5

Test takers should be provided appropriate instructions, practice, and other support necessary to reduce construct-irrelevant variance.

Comment: Instructions to test takers should clearly indicate how to make responses, except when doing so would obstruct measurement of the intended construct (e.g., when an individual’s spontaneous approach to the test-taking situation is being assessed). Instructions should also be given in the use of any equipment or software likely to be unfamiliar to test takers, unless accommodating to unfamiliar tools is part of what is being assessed. The functions or interfaces of computer-administered tests may be unfamiliar to some test takers, who may need to be shown how to log on, navigate, or access tools. Practice opportunities should be given when equipment is involved, unless use of the equipment is being assessed. Some test takers may need practice responding with particular means required by the test, such as filling in a multiple-choice “bubble” or interacting with a multimedia simulation… In addition, test takers should be clearly informed on how their rate of work may affect scores, and how certain responses, such as not responding, guessing, or responding incorrectly, will be treated in scoring, unless such directions would undermine the construct being assessed.

Standard 7.8

Test documentation should include detailed instructions on how a test is to be administered and scored.

Comment: Regardless of whether a test is to be administered in paper-and-pencil format, computer format, or orally, or whether the test is performance based, instructions for administration should be included in the test documentation. As appropriate, these instructions should include all factors related to test administration, including qualifications, competencies, and training of test administrators; equipment needed; protocols for test administrators; timing instructions; and procedures for implementation of test accommodations. When available, test documentation should also include estimates of the time required to administer the test to clinical, disabled, or other special populations for whom the test is intended to be used, based on data obtained from these groups during the norming of the test.